Interview

For details about the Appier interview process, please refer to:

In short, after getting to know the rest of the intern cohort, I can only say that landing a summer internship at Appier as a rising junior was really fortunate!

About the Summer Internship Program

Why do I call it a “program”?

On my first day, there happened to be a training session for all summer interns. All subsequent activities related to the summer internship were referred to as the “Summer Internship Program.” This year was the program’s 3rd year at Appier.

The summer internship program mainly consisted of three parts:

- Training (Orientation Training)

- The project itself

- Project sharing

Orientation Training

Though it’s called training, it was actually a very relaxed event and happened to take place on my first day.

The session mainly covered the key activities of the summer internship program, the number of applications over the years (apparently there were over 600 this year), and company culture. It was followed by self-introductions from the interns and time for open networking.

Composition of the Intern Team

About 15 summer interns attended that day. Judging from everyone’s self-introductions, roughly 10 of them were NTU students, were planning to study abroad, or were studying overseas and had returned to Taiwan. There was even a high school graduate heading to MIT, @jieruei Orz.

A funny thing that day was that almost everyone knew someone else in the internship program. For example, I was high school classmates with @m4xshen, and I knew @vax-r from NCKU’s GDSC. Many others mentioned that they also knew another intern from before, often from previous AppWorks Camp interactions. It made the odds of getting picked (15 out of over 600) seem a bit less daunting XD.

Onboarding

I actually started my internship in the second week of July.

Around May or June, HR asked whether I could start a week later than planned. The original start date was in the first week of July, but too many people were onboarding that week and HR wanted to spread the start dates out. My internship ran until the first week of September, which gave me more flexibility than many other interns who had to wrap up by mid-August, so HR suggested I start later.

I spent most of my first week waiting for access permissions. In the meantime, I read internal documents on Data Engineering, attended team meetings, and reviewed the existing codebase.

The Project

Each intern worked on different projects in various departments, depending on the needs of their department at the time.

I was a Backend Intern in the Data Platform department. My project is best described as an “Operation Dashboard,” but not a monitoring dashboard of the Grafana or Kibana kind.

It was a Web Dashboard provided by the Data Platform department for use by upstream and downstream departments, integrating with Appier’s OAuth Authentication and internal Authorization. The dashboard allowed authorized departments to:

- Modify Configurations related to the Data Platform: e.g., adjust the number of workers for a particular dev role in the XX Team.

- View the Status of the Data Platform: Browse the current datasets using the relevant filters.

- Automate Operation Tasks: Actions that previously required opening a ticket with the Data Team could now be done directly through the dashboard.

- Keep Records of the Above Operations with Audit Logs: For accountability and tracking purposes.
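One simple way to guarantee that every operation produces an audit record is a decorator that wraps each operation. The following is a minimal sketch under stated assumptions. The `audit_logged` decorator, the `AuditEntry` fields, the in-memory `AUDIT_LOG` list, and the `update_worker_count` operation are all hypothetical. A real dashboard would persist the entries, for example in Postgres.

```python
import functools
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass(frozen=True)
class AuditEntry:
    """One record of who ran which operation, with what parameters, and whether it worked."""
    user: str
    action: str
    params: dict[str, Any]
    succeeded: bool
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# Hypothetical in-memory store; a real dashboard would write entries to a database.
AUDIT_LOG: list[AuditEntry] = []


def audit_logged(action: str) -> Callable:
    """Record an AuditEntry for every call, whether the operation succeeds or raises."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(user: str, **params: Any) -> Any:
            try:
                result = func(user, **params)
            except Exception:
                AUDIT_LOG.append(AuditEntry(user, action, params, succeeded=False))
                raise
            AUDIT_LOG.append(AuditEntry(user, action, params, succeeded=True))
            return result
        return wrapper
    return decorator


@audit_logged("update_worker_count")
def update_worker_count(user: str, *, team: str, role: str, workers: int) -> None:
    """Hypothetical operation; the real one would change the Data Platform config."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
```

For example, calling `update_worker_count("alice@example.com", team="xx-team", role="dev", workers=8)` appends one successful entry. Failed attempts are logged too, which matters for accountability.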

Solved Problems

The main scenarios addressed are as follows:

XX Team’s on-call needs to urgently increase the number of Workers

- Previously, this required contacting the Data Team to change the configuration.

- If no one in the Data Team noticed, it could result in a service interruption for XX Team.

- Now, changes can be made directly on the Dashboard without needing the Data Team to adjust the configuration.

- XX Team’s on-call can make the change directly, and it is recorded in the Audit Log.

XX Team submits an Operation Task

- Previously, a ticket had to be submitted to the Data Team for handling.

- Generally, such tasks could be resolved by running a short script.

- However, the Data Team might be occupied with more urgent tasks or in meetings.

- Now, tasks can be managed directly on the Dashboard without needing the Data Team.

- This saves time for both parties.
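Such tasks are usually a short script, so one way to expose them on a dashboard is a whitelist of pre-approved commands that users trigger by name. The following is a hypothetical sketch. `OPERATION_TASKS`, `run_operation_task`, and the stand-in command are illustrative and are not the dashboard’s actual code. The sketch only runs whitelisted commands, passes arguments as a list instead of through a shell, and enforces a timeout.

```python
import subprocess
import sys

# Hypothetical whitelist: task name -> command prefix.
# Only tasks listed here can be triggered from the dashboard.
OPERATION_TASKS: dict[str, list[str]] = {
    # Stand-in script that only prints; a real task would call Data Platform tooling.
    "refresh_dataset": [sys.executable, "-c", "import sys; print('refreshed', sys.argv[1])"],
}


def run_operation_task(name: str, *args: str, timeout: float = 60.0) -> str:
    """Run a whitelisted task and return its stdout; raise on unknown tasks or failures."""
    if name not in OPERATION_TASKS:
        raise KeyError(f"unknown operation task: {name}")
    completed = subprocess.run(
        [*OPERATION_TASKS[name], *args],  # argument list, no shell, so no shell injection
        capture_output=True,
        text=True,
        timeout=timeout,  # raises subprocess.TimeoutExpired if the script hangs
        check=True,  # raises subprocess.CalledProcessError on a non-zero exit code
    )
    return completed.stdout.strip()
```

A whitelist keeps the dashboard from becoming a general remote shell. Adding a new task becomes a reviewed code change rather than something a user can type in.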

Technical Aspects

System Architecture

The Operation Dashboard is built with Streamlit, which serves as both the front-end and the back-end.

It uses Postgres to store User Authorization data.

There is an additional Cronjob to regularly sync Appier’s Authorization relations into Postgres.
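At its core, this kind of sync is reconciliation. The job fetches the current Groups-Users relations from the source of truth, compares them with what is stored, and applies only the difference. The following is a minimal sketch, assuming memberships are `(group, user_email)` pairs. `plan_membership_sync` and `apply_sync` are hypothetical names, and both the fetch from Appier’s directory and the actual Postgres writes are left out.

```python
from typing import NamedTuple

Membership = tuple[str, str]  # (group, user_email)


class SyncPlan(NamedTuple):
    to_insert: set[Membership]
    to_delete: set[Membership]


def plan_membership_sync(source: set[Membership], stored: set[Membership]) -> SyncPlan:
    """Compute which rows to add and remove so that `stored` matches `source` exactly."""
    return SyncPlan(to_insert=source - stored, to_delete=stored - source)


def apply_sync(stored: set[Membership], plan: SyncPlan) -> set[Membership]:
    """Return the stored state after applying the plan.

    In Postgres this would be the DELETEs and INSERTs run inside one transaction.
    """
    return (stored - plan.to_delete) | plan.to_insert
```

Applying a diff avoids the brief window in which a truncate-and-reload approach would leave the authorization table empty and lock everyone out.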

Additionally, as mentioned earlier, the Dashboard needs to call the Kubernetes API to adjust configurations or call internal Data Platform APIs to display status.
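For the “increase the number of workers” scenario, here is a minimal sketch of the Kubernetes call. It assumes the workers run as a Deployment whose replica count is the worker count, and that the dashboard uses the official `kubernetes` Python client. `AppsV1Api.patch_namespaced_deployment_scale` comes from that client. The function name, the replica cap, and the example names are hypothetical. The API object is passed in so the function can be tested without a cluster.

```python
from typing import Any

MAX_REPLICAS = 50  # hypothetical safety cap so a typo cannot scale to thousands of pods


def scale_workers(apps_api: Any, deployment: str, namespace: str, replicas: int) -> dict:
    """Set a Deployment's replica count through its scale subresource."""
    if not 0 <= replicas <= MAX_REPLICAS:
        raise ValueError(f"replicas must be between 0 and {MAX_REPLICAS}, got {replicas}")
    body = {"spec": {"replicas": replicas}}
    # With the real client, apps_api would be kubernetes.client.AppsV1Api(),
    # created after kubernetes.config.load_incluster_config() inside the cluster.
    apps_api.patch_namespaced_deployment_scale(name=deployment, namespace=namespace, body=body)
    return body
```

In the dashboard, a call like this would sit behind the authorization check and be wrapped with audit logging, so on-call engineers get self-service scaling without losing accountability.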

Framework Selection

Other teams had previously built similar dashboards with Streamlit.

Streamlit is a framework for building web apps in Python. It is mainly used to present data visualizations or the results of machine learning models, and it has extensive support for Pandas, Matplotlib, and Plotly.

It lets you develop entirely in Python without additional front-end expertise. That suited the Data Platform department, which lacks front-end resources.

Therefore, Streamlit was chosen for development.

Streamlit Router Framework

The Dashboard consists mostly of front-end pages, and Streamlit’s native multi-page setup (st.navigation) is typically organized with each page as its own Python file.

This makes code tracing in an IDE inconvenient, because you can’t jump directly to a page’s definition with shift + click.

So, I built a decorator-based framework on top of st.navigation.

It lets multiple pages live in the same Python file and provides a Router for registering them, which makes code tracing easier.

Those familiar with FastAPI or Flask might find this approach more intuitive.

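```python
import streamlit as st

from framework import Router  # in-house decorator-based router built on st.navigation

# Every page registered on this router lives under the /dashboard prefix.
router = Router(prefix="/dashboard")


@router.page("/config")
def config_page():
    st.write("Config Page")


@router.page("/status")
def status_page():
    st.write("Status Page")
```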

This is a sample usage of the framework.
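The framework’s internals aren’t shown here, but the core idea fits in a few lines. The following is a minimal sketch of how such a `Router` might work, not the actual in-house implementation. `page` is a decorator that records the path and returns the function unchanged, so IDE jump-to-definition keeps working. `navigation` turns the registered pages into `st.Page` objects for `st.navigation`. Streamlit is imported lazily so the registration logic can be used without it. The method names and the path-flattening rule are assumptions.

```python
from typing import Callable

PageFunc = Callable[[], None]


class Router:
    """Hypothetical decorator-based page registry on top of st.navigation."""

    def __init__(self, prefix: str = "") -> None:
        self.prefix = prefix.rstrip("/")
        self.pages: dict[str, PageFunc] = {}

    def page(self, path: str) -> Callable[[PageFunc], PageFunc]:
        """Register a page function under prefix + path."""
        def decorator(func: PageFunc) -> PageFunc:
            full_path = f"{self.prefix}/{path.strip('/')}"
            if full_path in self.pages:
                raise ValueError(f"duplicate page path: {full_path}")
            self.pages[full_path] = func
            return func  # return the original function so IDEs can still jump to it
        return decorator

    def navigation(self):
        """Build st.navigation from the registered pages (requires Streamlit)."""
        import streamlit as st

        return st.navigation([
            # st.Page rejects nested url_path values such as "a/b", so the path is flattened.
            st.Page(func, title=func.__name__, url_path=path.strip("/").replace("/", "-"))
            for path, func in self.pages.items()
        ])
```

In the app’s entry script, `router.navigation().run()` would then render whichever page is selected. `st.navigation` returns a page object with a `run()` method.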

Authentication

Authentication determines whether a user is logged in.

Since the Dashboard is intended for use by upstream and downstream departments, it needs to integrate Appier’s OAuth Authentication and internal Authorization.

Authentication is implemented using Google OAuth.

However, because of limitations in Streamlit’s framework, login status can only be tracked in Streamlit’s server-side session state, not with a JWT stored in an HTTP-only cookie.
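A session-based login of this kind can be sketched in two steps. First, build Google’s OAuth 2.0 authorization URL with a random `state` value remembered in the session. Second, check that `state` when Google redirects back. The following is a minimal sketch assuming the Authorization Code flow. `session` stands in for `st.session_state` (any dict-like object), and the function names, client ID, and redirect URI are placeholders. Exchanging the code for tokens and validating the ID token are omitted.

```python
import secrets
from collections.abc import MutableMapping
from urllib.parse import urlencode

GOOGLE_AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"


def build_login_url(session: MutableMapping, client_id: str, redirect_uri: str) -> str:
    """Create the Google sign-in URL and remember the anti-CSRF state in the session."""
    state = secrets.token_urlsafe(32)
    session["oauth_state"] = state
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",
        "scope": "openid email",
        "state": state,
    }
    return f"{GOOGLE_AUTH_ENDPOINT}?{urlencode(params)}"


def verify_callback_state(session: MutableMapping, returned_state: str) -> bool:
    """Accept the callback only if it carries the state issued to this session (single use)."""
    expected = session.pop("oauth_state", None)
    return expected is not None and secrets.compare_digest(expected, returned_state)


def is_logged_in(session: MutableMapping) -> bool:
    """Login status lives only in the session, e.g. the user's email set after the callback."""
    return "user_email" in session
```

Because the session lives on the Streamlit server rather than in a cookie, a browser refresh that starts a new session means logging in again. That is one practical cost of this limitation.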

Authorization

Authorization determines whether a user has the right to perform a certain action.

Building and maintaining an authorization system from scratch for all Data Platform-related departments would:

- Increase maintenance overhead for the Data Platform Team.

- Potentially cause discrepancies with Appier’s existing Groups-Users relations.

To simplify the process, the Data Platform Team only needs to maintain the mapping between Groups-Users and Actions.

Therefore, a Cronjob periodically syncs Appier’s Groups-Users relations into Postgres.

The Dashboard checks this Postgres database to determine authorization.
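With the synced memberships and the team-maintained mapping in place, “may this user perform this action?” becomes a single query. The following schema and check are hypothetical. The table and column names are assumptions, not taken from the actual ER diagram, and `sqlite3` stands in for Postgres so the sketch runs anywhere. The SQL itself is portable, though with a Postgres driver such as psycopg the placeholders would be `%s` instead of `?`. `group_members` holds what the Cronjob syncs, and `group_actions` holds the mapping the Data Platform Team maintains.

```python
import sqlite3

SCHEMA = """
CREATE TABLE group_members (            -- synced from Appier's Groups-Users relations
    group_name TEXT NOT NULL,
    user_email TEXT NOT NULL,
    PRIMARY KEY (group_name, user_email)
);
CREATE TABLE group_actions (            -- maintained by the Data Platform Team
    group_name TEXT NOT NULL,
    action     TEXT NOT NULL,
    PRIMARY KEY (group_name, action)
);
"""


def is_authorized(conn: sqlite3.Connection, user_email: str, action: str) -> bool:
    """True if any group the user belongs to is allowed to perform the action."""
    row = conn.execute(
        """
        SELECT EXISTS (
            SELECT 1
            FROM group_members AS m
            JOIN group_actions AS a ON a.group_name = m.group_name
            WHERE m.user_email = ? AND a.action = ?
        )
        """,
        (user_email, action),
    ).fetchone()
    return bool(row[0])
```

Keeping membership and permissions in separate tables means the Cronjob can overwrite `group_members` freely without touching the permissions the team has granted.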

The ER Diagram of the Authorization in Postgres would look like this.

Project Presentation

The most important part of the Summer Internship Program is a 30-minute English project presentation.

(You can, however, use Google Slides to support your talk.)

This is followed by a 5-minute Q&A session, also conducted entirely in English.

Each summer intern shares their project in the last week of their internship, with almost all presentations being conducted in pairs.

All Appier employees are welcome to attend these presentations.

They are held in a physical conference room, and anyone interested can join either in person or remotely.

This is a great opportunity to learn about the services maintained by other departments.

Team and Mentorship

Since the Data Platform Team isn’t a Product Team, it has no front-end specialists, only Backend and Data Engineers.

I had two mentors:

One primarily mentored me on the summer internship project.

The other mainly mentored me on the API refactoring project.

(More on this in the “About the Long-term Internship” section.)

Everyone was very friendly!

They were always willing to share knowledge about Data Engineering, system architecture, and their experiences.

Work Environment & Food

Office

The office is located near Xiangshan Station in the Xinyi District, Taipei.

You can clearly see Taipei 101.

Some floors even have spiral staircases for moving between floors.

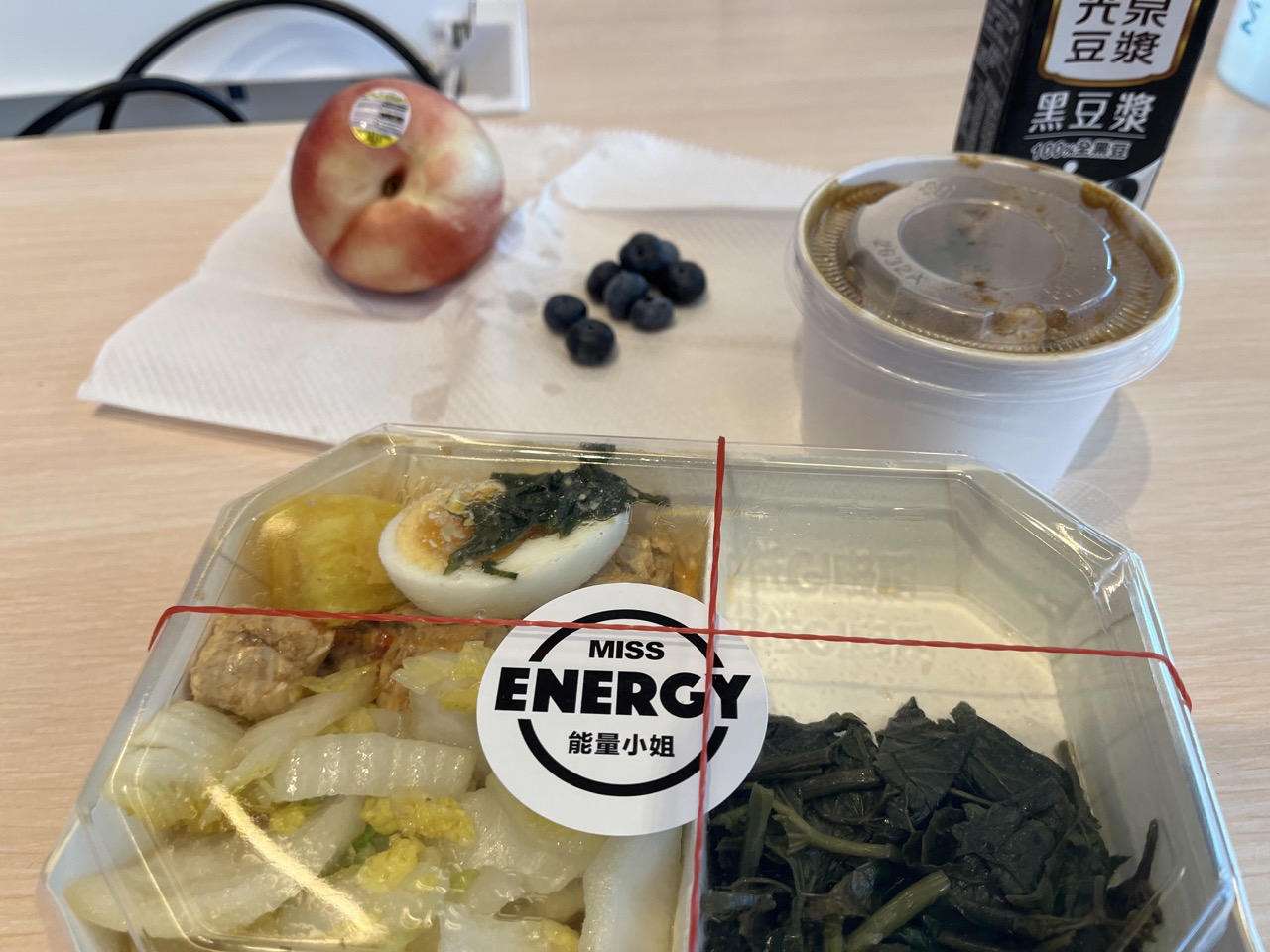

Lunch

Since the office is in Xinyi District, a lunch costing under 200 TWD is considered a steal. ~

Most of the time, when dining with the team, we would go to department stores.

Sometimes, we’d head towards City Hall for some local street food.

The office has a shelf full of drinks and some snacks, mostly small pouches.

I would grab a soy milk almost every day XD

Occasionally, there were fruits available (I’ve had bananas, blueberries, and peaches).

I often paired my healthy lunch boxes bought nearby with the office drinks and fruits. ~

Happy Hour

Every Friday afternoon, there’s a Happy Hour where you can order afternoon tea!

It could be drinks or snacks; it varies each time.

(One time it was waffles from Xiaomu, but I missed out since it was during a Team Building session. So unfortunate QQ)

Clubs

One day during lunch, a club showed us how to make cocktails.

That day, we ordered a bunch of pizzas and Korean fried chicken!

Company Culture

Every company has its own values.

Appier’s core values are:

- open-mindedness

- direct communications

- ambition

I also talked with my mentors about how company cultures differ.

This open-mindedness was evident during Design Review or Code Review sessions.

Mentors or supervisors would set a broad direction, leaving the technical details up to me to design and discuss with the team.

Team members would also provide feedback during reviews.

Technical Aspects

Data Engineering

This time, I mainly encountered new technologies related to Data Engineering.

There’s so much to share that I’ve written a separate post about it.

Infra and Dev

At Appier, Backend is responsible for managing the Helm Charts and Grafana Dashboards of the department’s own applications, as well as the Infra those applications use, such as Postgres, Redis, and Kafka. There is no dedicated Infra team that maintains these for us.

Instead, Infra is provided as a service (SaaS-style), for example:

- ArgoCD, with many convenient plugins

- Management and upgrades of multiple Kubernetes clusters

- EFK integrated with k8s for log tracing

(There are many other services, but these are the ones I’ve interacted with so far.)

This differs from my previous internships and part-time jobs, and I think the difference mainly comes from Appier having more departments. At previous companies, each product was essentially its own department, and dedicated Infra teams handled deployment- and storage-related maintenance.

At Appier, Backend responsibilities are broader. Handling production issues and finding root causes requires a deeper understanding of the Infra our own applications use.

Implementing Infra as Code

Appier has many departments, with dozens of Kubernetes clusters and Cloud SQL instances, along with VPCs, IAM, Secrets, and so on. At this scale, manual maintenance is no longer feasible.

Here, I truly experienced the benefits of Infra as Code. Repetitive resources can be modularized, and the Terraform in the repo should stay consistent with the actual state of the instances.

Once cloud Infra reaches a certain scale, IaC becomes a necessity.
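The “repo matches reality” property is what `terraform plan` checks. It compares the desired state in code with the real resources and reports what would be created, changed, or destroyed. The following toy sketch shows only that comparison. It assumes resources are plain dicts keyed by resource address, `plan_changes` is a hypothetical name, and this is not how Terraform is implemented (the real tool also keeps a state file and relies on provider-specific logic).

```python
from typing import Any

Resources = dict[str, dict[str, Any]]  # resource address -> attributes


def plan_changes(desired: Resources, actual: Resources) -> dict[str, list[str]]:
    """Report which resources to create, update, or destroy so that `actual` matches `desired`."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(
            addr for addr in desired.keys() & actual.keys() if desired[addr] != actual[addr]
        ),
        "destroy": sorted(actual.keys() - desired.keys()),
    }
```

An empty plan means no drift. A non-empty one after someone changed a resource by hand in the console is exactly the discrepancy IaC is meant to surface.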

Role of Senior Engineers

- Review Code

- Cost Reduction

- Root Cause Analysis

- Sprint Planning

- Performance Tuning

I rarely get to work on these areas, and they are where the gap between a Senior and me becomes apparent.

Previously, I mostly had opportunities to improve OLTP performance. Here, however, there are many opportunities to tune OLAP, streaming, and batch-processing workloads, topics I had not encountered before.

Development Process

Scrum and Sprint

It seems that all departments follow Scrum and use Jira to manage Sprints.

In the Data Platform department, where I currently work, each Sprint lasts 2 weeks.

Each Sprint begins with a Sprint Planning + Retro meeting, followed by a Grooming session the next week.

A Daily Standup goes over the current status of the tickets.

However, one day each week is designated as a No Meeting Day, and meetings are avoided on that day as much as possible.

Design Review and Code Review

If a feature or refactor involves designing a new architecture, a Design ticket is created. All team members are then invited to a Design Review to make sure the system design has no potential issues and can scale in the future.

In our department, if a change up for Code Review is relatively large, a Code Review meeting is scheduled so everyone can review it together. For minor fixes, approval is requested directly on Slack.

This felt quite new to me, as I had assumed PR reviews were always done asynchronously. ~

GitOps and Release

Every Wednesday, there’s a Release Meeting, and Service Owners add their service updates to the Release Note beforehand.

Since deployment is done on Kubernetes using ArgoCD, the meeting confirms with each Service Owner whether the corresponding PRs have been merged and whether they want to release that week.

If issues come up during a release, it is rolled back immediately.

For updates to external services, stakeholders are notified via Slack.

About the Long-term Internship

Since I had completed the main summer internship project by the first week of August, my mentor asked which areas of the department I was interested in.

I felt that diving straight into the specifics of Data Engineering (such as Hive, Spark, and Airflow) would involve a steep learning curve. So I decided to start with the APIs managed by the department, which, coincidentally, use FastAPI, a framework I am quite familiar with.

At that time, we were migrating to a new tech stack. I took the opportunity to migrate the legacy Flask API codebase to a FastAPI monorepo and used features of the new stack to improve the original API.

Right around when I started my summer internship, one of the Data Team’s long-term interns moved into a full-time role, which opened up a headcount for a long-term intern.

At the end of August, my class schedule turned out to be free on Mondays and Tuesdays, and I wanted to finish migrating the refactored API. So I decided to stay on as a long-term intern!